CDLI Data Structure

The main page of CDLI is here.

Information on searching the CDLI database is here.

All texts displayed in CDLI with a translation are here.

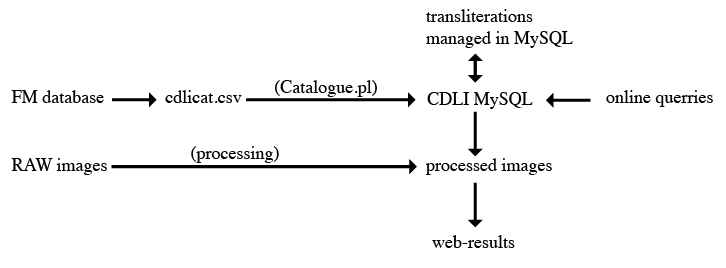

CDLI data consists of three main components: catalogue, transliterations, and images. All public data are available here.

Catalogue

Main catalogue: maintained in a FileMaker database (cdli_cat), manually exported to CSV (cdlicat.csv), and uploaded to a folder (cdli_web) containing a Perl script (Catalogue.pl). The script loads (command) cdlicat.csv into the CDLI MySQL database on the server (cdli_sql).
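Neither Catalogue.pl nor its command is reproduced in this document. As a rough stand-in for the load step only, the sketch below reads a CSV export and replaces the contents of a SQL table with its rows, using Python's built-in sqlite3 in place of MySQL. The table name `catalogue`, the two columns `id_text` and `designation`, and the full-reload behaviour are assumptions, not a description of the actual script.

```python
import csv
import io
import sqlite3
from typing import List, Tuple


def load_catalogue(conn: sqlite3.Connection, csv_text: str) -> int:
    """Replace the rows of a hypothetical `catalogue` table with the CSV contents."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS catalogue (id_text TEXT PRIMARY KEY, designation TEXT)"
    )
    # Assumed full reload: the exported CSV is treated as the single source of truth.
    conn.execute("DELETE FROM catalogue")
    reader = csv.DictReader(io.StringIO(csv_text))
    rows: List[Tuple[str, str]] = [(r["id_text"], r["designation"]) for r in reader]
    conn.executemany("INSERT INTO catalogue (id_text, designation) VALUES (?, ?)", rows)
    conn.commit()
    return len(rows)
```

For example, `load_catalogue(sqlite3.connect(":memory:"), "id_text,designation\nP000001,Tablet A\n")` returns 1.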

Online search: the website's search functions are powered by a MySQL database (more). The MySQL database is dumped to the server automatically, shortly after midnight, and the four most recent dumps are kept; these are exported as .tar.gz to backup.
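The retention rule (keep the four most recent dumps) can be sketched as a small selection function. The sketch assumes a hypothetical naming scheme `cdli_sql_YYYYMMDD.sql` that encodes the dump date in the file name; the real dump names are not given here.

```python
import re
from typing import List, Tuple

# Hypothetical naming scheme for nightly dumps: cdli_sql_YYYYMMDD.sql
DUMP_PATTERN = re.compile(r"^cdli_sql_(\d{8})\.sql$")


def split_dumps(filenames: List[str], keep: int = 4) -> Tuple[List[str], List[str]]:
    """Return (kept, expired): the `keep` newest dumps and all older ones."""
    dated = []
    for name in filenames:
        match = DUMP_PATTERN.match(name)
        if match:  # files that do not follow the naming scheme are ignored
            dated.append((match.group(1), name))
    # YYYYMMDD strings sort chronologically, so a reverse sort puts the newest first.
    dated.sort(reverse=True)
    names = [name for _, name in dated]
    return names[:keep], names[keep:]
```

The expired list is what a rotation job would delete or move off the server after archiving.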

Transliterations

Transliterations are managed entirely by the MySQL database (cdli_sql). A nightly backup script (name?) writes two copies: cdliatf.atf in transliterations, and cdliatfDATE.atf in transliterations/datedBackups.
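A minimal sketch of the two-copy backup, assuming the ATF export is already available as text and that DATE is formatted as YYYYMMDD. The source does not specify the date format, and the real script is unnamed, so `write_atf_backup` is a hypothetical helper.

```python
from datetime import date
from pathlib import Path
from typing import Tuple


def write_atf_backup(root: Path, atf_text: str, day: date) -> Tuple[Path, Path]:
    """Write the latest ATF dump and a dated copy alongside it."""
    latest = root / "transliterations" / "cdliatf.atf"
    # Assumed DATE format (YYYYMMDD); the source only gives the pattern cdliatfDATE.atf.
    dated = root / "transliterations" / "datedBackups" / f"cdliatf{day:%Y%m%d}.atf"
    dated.parent.mkdir(parents=True, exist_ok=True)  # also creates transliterations/
    latest.write_text(atf_text, encoding="utf-8")  # overwritten every night
    dated.write_text(atf_text, encoding="utf-8")   # accumulates one file per night
    return latest, dated
```

Keeping an undated "latest" file gives other tools a stable path, while the dated copies preserve history.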

Images

Processed images and thumbnails are posted to the server (cdli_web); these files are picked up automatically by MySQL for display in search results.
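How search results locate these files is not described here. One plausible mechanism, sketched below purely as an assumption, is an existence check on a fixed directory layout keyed by artifact ID; the directories `photo/` and `tn_photo/` and the `.jpg` extension are hypothetical.

```python
from pathlib import Path
from typing import Dict, Optional


def image_paths(web_root: Path, artifact_id: str) -> Dict[str, Optional[Path]]:
    """Return the photo and thumbnail paths for an artifact, or None where a file is missing."""
    # Hypothetical layout: <web_root>/photo/<id>.jpg and <web_root>/tn_photo/<id>.jpg
    candidates = {
        "photo": web_root / "photo" / f"{artifact_id}.jpg",
        "thumbnail": web_root / "tn_photo" / f"{artifact_id}.jpg",
    }
    return {kind: (path if path.is_file() else None) for kind, path in candidates.items()}
```

A results page could then show a thumbnail only when one has been posted, without any database change.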

Data flow

CDLI and CIDOC CRM

The Web as we know it today is essentially a hypertext system of interconnected, human-readable pages: a Web of Documents. Adopting semantic technologies and the Linked (Open) Data publication paradigm can, however, help bring about a Web of Data, in which instances and resources are the nodes of a network and are expressed in machine-readable formats. Semantic technologies can help open up datasets, improve the dissemination of information, enrich data streams, and facilitate the discovery of previously unknown, implicit knowledge from explicitly declared facts.
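Deriving implicit knowledge from explicit facts can be made concrete with RDFS entailment. The sketch below applies two standard RDFS rules, rdfs9 (type propagation along `rdfs:subClassOf`) and rdfs11 (transitivity of `rdfs:subClassOf`), until nothing new is derived. Prefixed names stand in for full URIs; the artifact identifier is illustrative, while the class hierarchy in the test follows CIDOC CRM 7.x.

```python
from typing import Set, Tuple

Triple = Tuple[str, str, str]
RDF_TYPE = "rdf:type"
SUBCLASS_OF = "rdfs:subClassOf"


def infer_types(triples: Set[Triple]) -> Set[Triple]:
    """Naively apply RDFS rules rdfs9 and rdfs11 to a fixpoint."""
    inferred = set(triples)
    while True:
        new: Set[Triple] = set()
        for s, p, o in inferred:
            for s2, p2, o2 in inferred:
                if o == s2 and p2 == SUBCLASS_OF:
                    if p == RDF_TYPE:
                        new.add((s, RDF_TYPE, o2))     # rdfs9: instance of subclass => instance of superclass
                    elif p == SUBCLASS_OF:
                        new.add((s, SUBCLASS_OF, o2))  # rdfs11: subClassOf is transitive
        if new <= inferred:  # no new facts: fixpoint reached
            return inferred
        inferred |= new
```

Only the artifact's direct class is stated; its membership in the broader classes is inferred. Real reasoners use far more efficient algorithms than this quadratic loop.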

These best-practice guidelines are widely known as the Five Star criteria of Linked Data: information should be published online in machine-readable, non-proprietary formats; it should use HTTP URIs as discoverable names for things, following open standards such as RDF and SPARQL; and it should link to further resources.

In practical terms, publishing legacy data in such a machine-readable format involves three main stages of development and implementation. Firstly, the data is converted to RDF. Various open-source tools make this possible with relative ease, even for users with little technical experience, such as OpenRefine (via its RDF extension) and Karma; both provide a graphical interface that lets the user tidy up legacy data and export it as RDF. Karma also allows users to import their own ontologies and to map their data to whichever formal structure best represents the domain knowledge inherent in their dataset.
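To show what such a conversion produces, the sketch below turns catalogue-style CSV rows into N-Triples using only the Python standard library. The base URI `http://example.org/cdli/artifact/`, the columns `id_text` and `designation`, and the mapping to the CIDOC CRM class `E22_Human-Made_Object` (its name in CRM 7.x) plus `rdfs:label` are assumptions for illustration, not CDLI's actual mapping.

```python
import csv
import io
from typing import List

# All URIs and column names below are illustrative assumptions.
BASE = "http://example.org/cdli/artifact/"
CRM = "http://www.cidoc-crm.org/cidoc-crm/"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"


def literal(value: str) -> str:
    """Quote a string as an N-Triples literal, escaping characters that may not appear raw."""
    escaped = (
        value.replace("\\", "\\\\")
        .replace('"', '\\"')
        .replace("\n", "\\n")
        .replace("\r", "\\r")
    )
    return f'"{escaped}"'


def rows_to_ntriples(csv_text: str) -> List[str]:
    """Emit a type triple and a label triple for every catalogue row."""
    lines: List[str] = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Assumes id_text values are URI-safe (e.g. P-numbers such as P000001).
        subject = f"<{BASE}{row['id_text']}>"
        lines.append(f"{subject} <{RDF_TYPE}> <{CRM}E22_Human-Made_Object> .")
        lines.append(f"{subject} <{RDFS_LABEL}> {literal(row['designation'])} .")
    return lines
```

Joining the result with newlines gives a file with one triple per line, a format most triple stores can bulk-load.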

Secondly, the generated triples need to be stored on a server in a dedicated database, conveniently named a "triple store". Although several commercial options are available, many Digital Humanities projects have opted for Virtuoso, which also has an open-source edition. Other options include D2R (which exposes an existing relational database as virtual RDF rather than storing triples itself), example 2 and example 3, with links.
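Production triple stores such as Virtuoso add persistence, multiple indexes and a SPARQL engine. The toy class below is not modelled on any particular product; it only illustrates the core idea of storing (subject, predicate, object) triples and retrieving them by pattern, with `None` acting as a wildcard.

```python
from collections import defaultdict
from typing import Dict, Iterator, Optional, Set, Tuple

Triple = Tuple[str, str, str]


class TripleStore:
    """A toy in-memory triple store with a single subject index."""

    def __init__(self) -> None:
        self._triples: Set[Triple] = set()
        self._by_subject: Dict[str, Set[Triple]] = defaultdict(set)

    def add(self, s: str, p: str, o: str) -> None:
        triple = (s, p, o)
        self._triples.add(triple)
        self._by_subject[s].add(triple)

    def match(
        self, s: Optional[str] = None, p: Optional[str] = None, o: Optional[str] = None
    ) -> Iterator[Triple]:
        """Yield triples matching the pattern; None matches anything."""
        # Use the subject index when a subject is given, otherwise scan everything.
        candidates = self._by_subject.get(s, set()) if s is not None else self._triples
        for ts, tp, to in candidates:
            if (p is None or tp == p) and (o is None or to == o):
                yield (ts, tp, to)
```

Real stores typically keep several index orderings so that any combination of bound positions can be answered without a full scan.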

Thirdly, RDF triples are queried through a SPARQL endpoint. These queries differ from traditional searches, whether character matching or hierarchical and/or relational database queries (e.g. SQL): by traversing an interconnected graph of data, they can return results beyond those such methods would find, based on meaning and on implicit inferences.
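As a sketch of how a client talks to a SPARQL endpoint, the functions below build and send a "query via GET" request as defined by the SPARQL 1.1 Protocol, asking for results in the SPARQL JSON results format, using only the Python standard library. The endpoint URL and the example query are placeholders; no CDLI endpoint is assumed to exist.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Dict, List


def sparql_get_request(endpoint: str, query: str) -> urllib.request.Request:
    """Build a SPARQL 1.1 Protocol query-via-GET request that asks for JSON results."""
    separator = "&" if "?" in endpoint else "?"
    url = endpoint + separator + urllib.parse.urlencode({"query": query})
    return urllib.request.Request(url, headers={"Accept": "application/sparql-results+json"})


def run_query(endpoint: str, query: str) -> List[Dict[str, Any]]:
    """Send the query over the network and return the result rows ('bindings')."""
    with urllib.request.urlopen(sparql_get_request(endpoint, query)) as response:
        results = json.load(response)
    return results["results"]["bindings"]


# Example query using the CIDOC CRM namespace; the endpoint it is sent to is a placeholder.
EXAMPLE_QUERY = """
PREFIX crm: <http://www.cidoc-crm.org/cidoc-crm/>
SELECT ?object WHERE { ?object a crm:E22_Human-Made_Object } LIMIT 10
"""
```

Against a live endpoint, `run_query("https://example.org/sparql", EXAMPLE_QUERY)` would return a list of bindings, each mapping `object` to a dictionary with `type` and `value` keys, as the SPARQL 1.1 JSON results format specifies.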

Interlinking RDF triples allows us to trace a path of relevant information through large datasets and to identify further relevant information elsewhere on the Web. Assyriological data, for example, could be enriched by complementary datasets from other domains within Archaeology and Ancient History, and potentially also by more disparate datasets, such as environmental or astronomical data.
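Following links across datasets amounts to a graph search. The sketch below finds the shortest chain of triples connecting two resources with a breadth-first search, treating each link as traversable in both directions. The resource names in the test are invented for illustration; in practice such traversals could also be expressed in SPARQL 1.1 property paths rather than in application code.

```python
from collections import deque
from typing import Dict, Iterable, List, Optional, Tuple

Triple = Tuple[str, str, str]


def find_path(triples: Iterable[Triple], start: str, goal: str) -> Optional[List[Triple]]:
    """Return the shortest list of triples linking start to goal, or None if unconnected."""
    # Build an undirected adjacency list so links can be followed either way.
    neighbours: Dict[str, List[Tuple[str, Triple]]] = {}
    for s, p, o in triples:
        neighbours.setdefault(s, []).append((o, (s, p, o)))
        neighbours.setdefault(o, []).append((s, (s, p, o)))
    # previous[node] records the node we came from and the triple used to get here.
    previous: Dict[str, Optional[Tuple[str, Triple]]] = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path: List[Triple] = []
            step = previous[node]
            while step is not None:  # walk back to the start
                parent, triple = step
                path.append(triple)
                step = previous[parent]
            return list(reversed(path))
        for nxt, triple in neighbours.get(node, []):
            if nxt not in previous:
                previous[nxt] = (node, triple)
                queue.append(nxt)
    return None
```

Because breadth-first search explores resources in order of distance, the first path found to the goal uses the fewest links.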